People recognition with active lighting

The active lighting functionality uses LED strips in the operator's field of view to indicate when a person is in the vicinity of the machine. People are detected around the machine, and color coding shows where a person is in relation to the machine and how far away that person is.
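To illustrate how a direction-and-distance color coding of this kind could be computed, here is a minimal Python sketch. It is not the project's implementation: the machine-centred coordinate frame, the number of LED segments, the distance thresholds, and all names (`Detection`, `led_segment`, `distance_color`, `highlight`) are assumptions made for the example.

```python
import math
from dataclasses import dataclass

# Hypothetical thresholds in metres; the source does not state any values.
DANGER_M = 3.0
WARNING_M = 8.0


@dataclass
class Detection:
    """Position of a detected person in an assumed machine-centred frame."""
    x: float  # metres ahead of the machine
    y: float  # metres to the left of the machine


def distance_color(distance_m: float) -> str:
    """Pick a color from the distance between the person and the machine."""
    if distance_m < DANGER_M:
        return "red"
    if distance_m < WARNING_M:
        return "yellow"
    return "green"


def led_segment(det: Detection, num_segments: int = 8) -> int:
    """Return the index of the LED segment that faces the person.

    Segment 0 starts straight ahead; indices increase counter-clockwise.
    """
    bearing = math.degrees(math.atan2(det.y, det.x)) % 360.0
    return int(bearing // (360.0 / num_segments)) % num_segments


def highlight(det: Detection, num_segments: int = 8) -> tuple[int, str]:
    """Combine direction (segment index) and distance (color)."""
    distance = math.hypot(det.x, det.y)
    return led_segment(det, num_segments), distance_color(distance)
```

With these assumed values, a person about 5 m away and mostly to the left of the machine, `highlight(Detection(x=1.0, y=5.0))`, lights segment 1 in yellow.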

Snow Grooming with Laser Projection

The use case 1 demonstrator from partner Prinoth AG has been equipped with a laser projector that uses XR to project additional information in front of the snow groomer. It projects defined graphics, such as arrows, velocity information, and warnings, at specific locations in the environment. To achieve this, we rely on localization and environmental reconstruction. Localization is handled using GPS data from the vehicle CAN bus, while the reconstruction process is implemented in Unity.
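Placing a graphic at a specific location requires expressing a GPS position relative to the vehicle. The source does not describe how this is done, so the following Python sketch only shows one common approach under stated assumptions: an equirectangular approximation, which is reasonable over short distances, followed by a rotation into the vehicle frame. The function names and the heading convention (degrees clockwise from north) are hypothetical.

```python
import math

EARTH_RADIUS_M = 6_371_000.0  # mean Earth radius


def gps_to_local(lat_deg: float, lon_deg: float,
                 ref_lat_deg: float, ref_lon_deg: float) -> tuple[float, float]:
    """Return (east, north) offsets in metres from a reference point.

    Uses an equirectangular approximation, valid for short distances.
    """
    d_lat = math.radians(lat_deg - ref_lat_deg)
    d_lon = math.radians(lon_deg - ref_lon_deg)
    east = EARTH_RADIUS_M * d_lon * math.cos(math.radians(ref_lat_deg))
    north = EARTH_RADIUS_M * d_lat
    return east, north


def to_vehicle_frame(east: float, north: float,
                     heading_deg: float) -> tuple[float, float]:
    """Rotate a world offset into (forward, right) relative to the vehicle.

    heading_deg is assumed to be measured clockwise from north.
    """
    h = math.radians(heading_deg)
    forward = north * math.cos(h) + east * math.sin(h)
    right = east * math.cos(h) - north * math.sin(h)
    return forward, right
```

Here the vehicle's own GPS fix would serve as the reference point, so the result is the target's offset ahead of and beside the snow groomer.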

The video shows the results of many intense integration days and late evenings spent on the mountain slopes.

Third XR prototype of remote operation of a reach stacker

The following updates have been integrated:

- Camera system to enable flexible workspace layouts. Each camera preview window can be expanded and resized to take up to one-third of the screen space

- Wheel direction visualization using AR

- Better hand tracking for controlling camera views, including feedback for users of hand tracking

- Simulated lidar view

- Color-coded distance lines to container corners to provide visual hints to the operator

- Weather condition simulation: rainy, foggy, and snowy conditions

- Haptic feedback

In the next phase of development, the most suitable features identified through the three iteration cycles will be carefully selected and implemented into the actual remote operation station. This phase will involve further refinement of the XR interface and interaction methods based on user feedback.

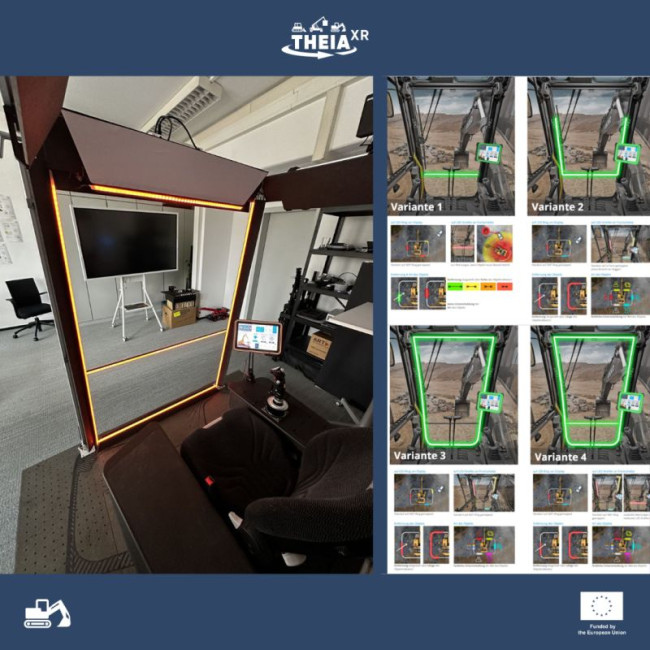

Cabin Design

Recently, a first workshop was held with Professur für Baumaschinen and Industrial Design Engineering | td on integrating cabin illumination into the HMI.

Light indicators around the front window and the main display are intended to inform the operator about the direction, distance, and collision risk of obstacles near the machine. Using our modular and multimodal prototyping cab, it was exciting to try out different feedback setups. The colleagues tested different mappings using color, animations, and position of the lights to convey information, aiming to improve perception and attention control and to reduce distraction.

Credits to Richard Morgenstern, who implemented the HMI setup and developed the various concepts as part of his diploma thesis.

Second XR prototype of remote operation for collecting user feedback

The use case target is KALMAR's remote-controlled reach stacker, which handles containers in a harbour. In the first setup, operators work with their eyes on video screens and remotely control these machines.

The second prototype focuses on a simulated 360° camera view and embedded augmented content, which was highlighted several times during the first evaluation. Augmented reality content was based on several available sensors and other data.

The user is now able to choose between four different camera views:

- cabin view / driver's perspective

- spreader top-down view

- rear view / counterweight, and

- drone / bird's eye view

The visualization was done with an XR powerwall.

The second user evaluation was held at VTT's XR lab at the end of November, with Kalmar personnel executing simple pick-and-place tasks. To assess the usability of the prototype, users were asked to fill in a System Usability Scale (SUS) questionnaire. The resulting score of 70 means usability was evaluated as acceptable, and the evaluation also yielded feedback for further enhancement.
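For readers unfamiliar with how a SUS score is derived, the standard scoring rule can be sketched in Python as follows. Each participant answers ten items on a 1 to 5 scale; odd-numbered items contribute the response minus 1, even-numbered items contribute 5 minus the response, and the sum is multiplied by 2.5 to give a value between 0 and 100. The function names and the sample responses are illustrative only and are not data from the evaluation.

```python
from statistics import mean


def sus_score(responses: list[int]) -> float:
    """Compute the SUS score (0-100) for one participant's ten answers."""
    if len(responses) != 10:
        raise ValueError("SUS requires exactly 10 responses")
    total = 0
    for item, response in enumerate(responses, start=1):
        if not 1 <= response <= 5:
            raise ValueError("each response must be between 1 and 5")
        # Odd items are positively worded, even items negatively worded.
        total += (response - 1) if item % 2 == 1 else (5 - response)
    return total * 2.5


def study_score(all_responses: list[list[int]]) -> float:
    """Average the per-participant SUS scores of a study."""
    return mean(sus_score(r) for r in all_responses)


# Made-up answers for two hypothetical participants.
example = [
    [4, 2, 4, 2, 4, 2, 4, 2, 4, 2],
    [3, 3, 3, 3, 3, 3, 3, 3, 3, 3],
]
print(study_score(example))
```

The study-level figure is conventionally the mean of the individual scores, which is what `study_score` computes.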

First XR prototype of remote operation for collecting user feedback

Project partner VTT has been developing a Mixed Reality application for the EU-funded THEIA-XR project, which aims to enhance operators' awareness when controlling off-highway machinery.

The demo makes use of a Varjo XR-3 headset to show real-world objects in a virtual scene, in which the user can operate a realistic representation of a Reach Stacker from Kalmar. Thanks to the Ultraleap sensor on the XR-3, the user can interact with a virtual hand menu to freely move around the scene, while a real joystick controller lets them operate the Reach Stacker.

VTT had the chance to run a first user test and collected a lot of useful feedback, which is the main driver of the redesign process that has already started.